捞一下,附代码

来源:5-11 批归一化实战(2)

战战的坚果

2020-04-05

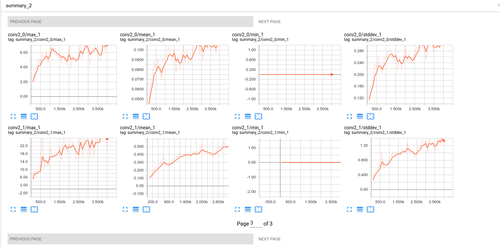

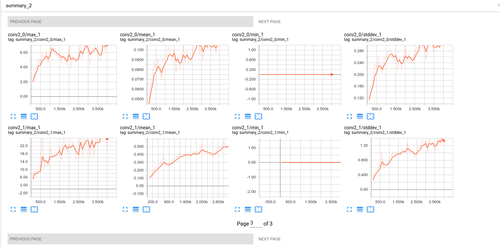

在tensorboard显示出来各个层的信息,但是它在显示完conv0,conv0_0, conv0_1,conv1_0,conv1_1,conv1_2,conv2_0,conv2_1,后,还要显示key_1,key_7,key。接下来粘上代码:

```

import tensorflow as tf

import os

import pickle

import numpy as np

CIFAR_DIR = "./cifar-10-batches-py"

print(os.listdir(CIFAR_DIR))

def load_data(filename):

"""read data from data file."""

with open(filename, 'rb') as f:

data = pickle.load(f, encoding='bytes')

return data[b'data'], data[b'labels']

# tensorflow.Dataset.

class CifarData:

def __init__(self, filenames, need_shuffle):

all_data = []

all_labels = []

for filename in filenames:

data, labels = load_data(filename)

all_data.append(data)

all_labels.append(labels)

self._data = np.vstack(all_data)

self._data = self._data

self._labels = np.hstack(all_labels)

print(self._data.shape)

print(self._labels.shape)

self._num_examples = self._data.shape[0]

self._need_shuffle = need_shuffle

self._indicator = 0

if self._need_shuffle:

self._shuffle_data()

def _shuffle_data(self):

# [0,1,2,3,4,5] -> [5,3,2,4,0,1]

p = np.random.permutation(self._num_examples)

self._data = self._data[p]

self._labels = self._labels[p]

def next_batch(self, batch_size):

"""return batch_size examples as a batch."""

end_indicator = self._indicator + batch_size

if end_indicator > self._num_examples:

if self._need_shuffle:

self._shuffle_data()

self._indicator = 0

end_indicator = batch_size

else:

raise Exception("have no more examples")

if end_indicator > self._num_examples:

raise Exception("batch size is larger than all examples")

batch_data = self._data[self._indicator: end_indicator]

batch_labels = self._labels[self._indicator: end_indicator]

self._indicator = end_indicator

return batch_data, batch_labels

train_filenames = [os.path.join(CIFAR_DIR, 'data_batch_%d' % i) for i in range(1, 6)]

test_filenames = [os.path.join(CIFAR_DIR, 'test_batch')]

train_data = CifarData(train_filenames, True)

test_data = CifarData(test_filenames, False)

def conv_wrapper(inputs,

name,

is_training,

output_channel,

strides = (1,1),

trainable = True,

kernel_size = (3,3),

activation = tf.nn.relu,

padding = 'same',

kernel_initializer = None):

with tf.name_scope(name):

conv2d = tf.layers.conv2d(inputs,

output_channel,

kernel_size,

strides = strides,

padding = padding,

activation = None,

name = name + '/conv2d')

bn = tf.layers.batch_normalization(conv2d,

training = is_training)

return activation(bn)

def pooling_wrapper(inputs,

name,

kernel_size = (2,2),

strides = (2,2),

padding = 'valid'):

return tf.layers.average_pooling2d(inputs,

kernel_size,

strides,

name = name,

padding = padding)

#将残差连接块抽象成函数

def residual_block(x, output_channel):

"""residual connection implementation"""

input_channel = x.get_shape().as_list()[-1]

if input_channel * 2 == output_channel:

increase_dim = True

strides = (2, 2)

elif input_channel == output_channel:

increase_dim = False

strides = (1, 1)

else:

raise Exception("input channel can't match output channel")

conv1 = conv_wrapper(x,'conv1',is_training, output_channel, strides)

conv2 = conv_wrapper(conv1,'conv2',is_training, output_channel)

if increase_dim:

#[None, image_width, image_height, channel] -> [,,,channel*2]

#pooled_x = tf.layers.average_pooling2d(x,'pooled_x',(2,2),(2,2), 'valid')

pooled_x = tf.layers.average_pooling2d(x,

(2, 2),#kernel size

(2, 2),#stride,

padding = 'valid')

#做一个padding,padding在通道上

padded_x = tf.pad(pooled_x,

[[0,0],

[0,0],

[0,0],

[input_channel // 2, input_channel // 2]])

else:

padded_x = x

output_x = conv2 + padded_x

return output_x

#res_net(x_image, [2,3,2], 32, 10)

def res_net(x,

num_residual_blocks,

#num_subsampling,

num_filter_base,

class_num):

# residual network implementation

# Args:

# -num_residual_blocks定义每一层上有多少个残差连接块,eg:[3,4,6,3]

# -num_subsampling定义需要做多少次降采样,eg:4

# -num_filter_base:通道数目的base,即:最初的通道数目,

# -class__num:泛化,可以适应多种类别数目不同的数据集

num_subsampling = len(num_residual_blocks)

layers = []

layer_dict = {}

# x:[None, width, height, channel] -> [width, height, channel]

input_size = x.get_shape().as_list()[1:]

# 定义一个命名空间,在这个空间下定义的变量,

# 它们的名字就会是conv0/xxxx,这样可以有效的防止命名冲突。

with tf.variable_scope('conv0'):

conv0 = conv_wrapper(x,'conv0',is_training, num_filter_base,(1,1), False)

layers.append(conv0)

layer_dict['conv0'] = conv0

# eg:num_subsampling = 3, sample_id = [0,1,2]

for sample_id in range(num_subsampling):

for i in range(num_residual_blocks[sample_id]):

with tf.variable_scope("conv%d_%d" % (sample_id, i)):

conv = residual_block(

layers[-1],

num_filter_base * (2 ** sample_id))

layers.append(conv)

layer_dict[ 'conv%d_%d'% (sample_id, i)] = conv

multiplier = 2 ** (num_subsampling - 1)

assert layers[-1].get_shape().as_list()[1:] \n == [input_size[0] / multiplier,

input_size[1] / multiplier,

num_filter_base * multiplier]

with tf.variable_scope('fc'):

# layer[-1].shape : [None, width, height, channel]

# kernal_size: image_width, image_height

#将神经元图从二维的图变成一个像素点。一个值,就是它的均值。

global_pool = tf.reduce_mean(layers[-1], [1,2])

logits = tf.layers.dense(global_pool, class_num)

layers.append(logits)

return layers[-1],layer_dict

x = tf.placeholder(tf.float32, [None, 3072])

y = tf.placeholder(tf.int64, [None])

is_training = tf.placeholder(tf.bool, []) #它只有1个值,它的size就是没有size.

# [None], eg: [0,5,6,3]

x_image = tf.reshape(x, [-1, 3, 32, 32])

# 32*32

x_image = tf.transpose(x_image, perm=[0, 2, 3, 1])

data_aug_1 = tf.image.random_flip_left_right(x_image)

data_aug_2 = tf.image.random_brightness(data_aug_1, max_delta = 63)

data_aug_3 = tf.image.random_contrast(

data_aug_2, lower = 0.2, upper = 1.8)

normal_result_x_images = data_aug_3 / 127.5 - 1

y_,layer_dict = res_net(normal_result_x_images, [2,2,2,2], 64, 10)

print("*****************")

print(layer_dict)

print("*****************")

loss = tf.losses.sparse_softmax_cross_entropy(labels=y, logits=y_)

# y_ -> sofmax

# y -> one_hot

# loss = ylogy_

# indices

predict = tf.argmax(y_, 1)

# [1,0,1,1,1,0,0,0]

correct_prediction = tf.equal(predict, y)

accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float64))

# with tf.name_scope('train_op'):

# train_op = tf.train.AdamOptimizer(1e-3).minimize(loss)

with tf.name_scope('train_op'):

# train_op = tf.train.AdamOptimizer(1e-3).minimize(loss)

optimizer = tf.train.AdamOptimizer(1e-3)

update_ops = tf.get_collection(tf.GraphKeys.UPDATE_OPS)

# 有两个方案使用batch_normalization,第一种如下面的代码使用control dependencies,

# 第二种是不使用control_dependencies, 但在下面训练代码中调sess.run的时候,把update_ops也加进去,即

# sess.run([train_op, update_ops, ..], feed_dict = ..)

with tf.control_dependencies(update_ops):

train_op = optimizer.minimize(loss)

def variable_summary(var, name):

with tf.name_scope(name):

mean = tf.reduce_mean(var)

with tf.name_scope('stddev'):

stddev = tf.sqrt(tf.reduce_mean(tf.square(var - mean)))

tf.summary.scalar('mean', mean)

tf.summary.scalar('stddev', stddev)

tf.summary.scalar('min', tf.reduce_min(var))

tf.summary.scalar('max', tf.reduce_max(var))

tf.summary.histogram('histogram', var)

with tf.name_scope('summary'):

for key,value in layer_dict.items():

variable_summary(value, key)

loss_summary = tf.summary.scalar('loss', loss)

# 'loss': <10, 1.1>, <20, 1.08>

accuracy_summary = tf.summary.scalar('accuracy', accuracy)

#source_image = (x_image + 1) * 127.5

inputs_summary = tf.summary.image('inputs_image', data_aug_3)

merged_summary = tf.summary.merge_all() #train

merged_summary_test = tf.summary.merge([loss_summary, accuracy_summary]) #test

#训练时关注的东西会多一些,测试可能只关注accuracy

#为tensorboard只需要指定文件夹即可,会自动命名文件。

LOG_DIR = '.'

run_label = './run_resnet_tensorboard'

run_dir = os.path.join(LOG_DIR, run_label)

if not os.path.exists(run_dir):

os.mkdir(run_dir)

train_log_dir = os.path.join(run_dir, 'train')

test_log_dir = os.path.join(run_dir, 'test')

if not os.path.exists(train_log_dir):

os.mkdir(train_log_dir)

if not os.path.exists(test_log_dir):

os.mkdir(test_log_dir)

model_dir = os.path.join(run_dir, 'model')

if not os.path.exists(model_dir):

os.mkdir(model_dir)

saver = tf.train.Saver() #默认只保留最近5次的模型

model_name = 'ckp-10000' #指定恢复的checkpoint的名字

model_path = os.path.join(model_dir, model_name)

init = tf.global_variables_initializer()

batch_size = 20

train_steps = 100000

test_steps = 100

output_model_every_steps = 100 #每100步保存一次模型

output_summary_every_steps = 100 #每100次计算一次summary

# train 10k: 73.4%

with tf.Session() as sess:

sess.run(init)

train_writer = tf.summary.FileWriter(train_log_dir, sess.graph) #是否指定计算图

test_writer = tf.summary.FileWriter(test_log_dir)

fixed_test_batch_data, fixed_test_batch_labels \n = test_data.next_batch(batch_size)

if os.path.exists(model_path + '.index'):

saver.restore(sess, model_path)

print('model restored from %s' % model_path)

else:

print('model %s does not exist' % model_path)

for i in range(train_steps):

batch_data, batch_labels = train_data.next_batch(batch_size)

eval_ops = [loss, accuracy, train_op]

should_output_summary = ((i+1) % output_summary_every_steps == 0)

if should_output_summary:

eval_ops.append(merged_summary)

eval_ops_results = sess.run(

eval_ops, #可能3、4,是一个变长值

feed_dict={

x: batch_data,

y: batch_labels,

is_training: True

})

loss_val, acc_val = eval_ops_results[0:2]

if should_output_summary:

train_summary_str = eval_ops_results[-1]

train_writer.add_summary(train_summary_str, i+1) #指定是在第几步输出的

#列表中存储的是merged_summary_test的值,即[merged_summary_test_value],

#取第0个是为了从列表中把元素取出来

test_summary_str = sess.run([merged_summary_test],

feed_dict={

x: fixed_test_batch_data,

y: fixed_test_batch_labels,

is_training: False,

})[0]

test_writer.add_summary(test_summary_str, i+1)

if (i+1) % 100 == 0:

print('[Train] Step: %d, loss: %4.5f, acc: %4.5f'

% (i+1, loss_val, acc_val))

if (i+1) % 1000 == 0:

test_data = CifarData(test_filenames, False)

all_test_acc_val = []

for j in range(test_steps):

test_batch_data, test_batch_labels \n = test_data.next_batch(batch_size)

test_acc_val = sess.run(

[accuracy],

feed_dict = {

x: test_batch_data,

y: test_batch_labels,

is_training: False,

})

all_test_acc_val.append(test_acc_val)

test_acc = np.mean(all_test_acc_val)

print('[Test ] Step: %d, acc: %4.5f' % (i+1, test_acc))

if (i+1) % output_model_every_steps == 0:

saver.save(sess,

os.path.join(model_dir, 'ckp-%05d' % (i+1)))

print('model saved to ckp-%05d' % (i+1))

```

写回答

1回答

-

正十七

2020-04-08

同学你好,我按照你的改动改了代码,没有出现这个问题啊。感觉是环境的问题或者是多次运行没有清空文件夹?

我的代码:

def variable_summary(var, name): """Constructs summary for statistics of a variable""" with tf.name_scope(name): mean = tf.reduce_mean(var) with tf.name_scope('stddev'): stddev = tf.sqrt(tf.reduce_mean(tf.square(var - mean))) tf.summary.scalar('mean', mean) tf.summary.scalar('stddev', stddev) tf.summary.scalar('min', tf.reduce_min(var)) tf.summary.scalar('max', tf.reduce_max(var)) tf.summary.histogram('histogram', var) with tf.name_scope('summary'): for key,value in layers_dict.items(): variable_summary(value, key) loss_summary = tf.summary.scalar('loss', loss) # 'loss': <10, 1.1>, <20, 1.08> accuracy_summary = tf.summary.scalar('accuracy', accuracy) merged_summary = tf.summary.merge_all() #train merged_summary_test = tf.summary.merge([loss_summary, accuracy_summary]) #test LOG_DIR = '.' run_label = './run_resnet' run_dir = os.path.join(LOG_DIR, run_label) if not os.path.exists(run_dir): os.mkdir(run_dir) train_log_dir = os.path.join(run_dir, 'train') test_log_dir = os.path.join(run_dir, 'test') if not os.path.exists(train_log_dir): os.mkdir(train_log_dir) if not os.path.exists(test_log_dir): os.mkdir(test_log_dir)init = tf.global_variables_initializer() batch_size = 20 train_steps = 10000 test_steps = 100 output_summary_every_steps = 100 # train 10k: 74.85% with tf.Session() as sess: sess.run(init) train_writer = tf.summary.FileWriter(train_log_dir, sess.graph) test_writer = tf.summary.FileWriter(test_log_dir) fixed_test_batch_data, fixed_test_batch_labels \ = test_data.next_batch(batch_size) for i in range(train_steps): batch_data, batch_labels = train_data.next_batch(batch_size) eval_ops = [loss, accuracy, train_op] should_output_summary = ((i+1) % output_summary_every_steps == 0) if should_output_summary: eval_ops.append(merged_summary) eval_ops_results = sess.run( eval_ops, feed_dict={ x: batch_data, y: batch_labels}) loss_val, acc_val = eval_ops_results[0:2] if should_output_summary: train_summary_str = eval_ops_results[-1] train_writer.add_summary(train_summary_str, i+1) test_summary_str = sess.run([merged_summary_test], feed_dict={ x: fixed_test_batch_data, y: fixed_test_batch_labels, })[0] test_writer.add_summary(test_summary_str, i+1) if (i+1) % 100 == 0: print('[Train] Step: %d, loss: %4.5f, acc: %4.5f' % (i+1, loss_val, acc_val)) if (i+1) % 1000 == 0: test_data = CifarData(test_filenames, False) all_test_acc_val = [] for j in range(test_steps): test_batch_data, test_batch_labels \ = test_data.next_batch(batch_size) test_acc_val = sess.run( [accuracy], feed_dict = { x: test_batch_data, y: test_batch_labels }) all_test_acc_val.append(test_acc_val) test_acc = np.mean(all_test_acc_val) print('[Test ] Step: %d, acc: %4.5f' % (i+1, test_acc))resnet那块跟你改的一样。

00

相似问题