A question about "SSLError"

Source: 6-2 Detailed explanation of requests features

weixin_慕勒4383646

2019-08-03

import re
import requests
from scrapy import Selector
from urllib import parse
from datetime import datetime
from request_spider.models import *

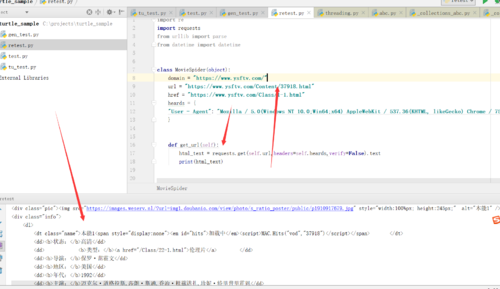

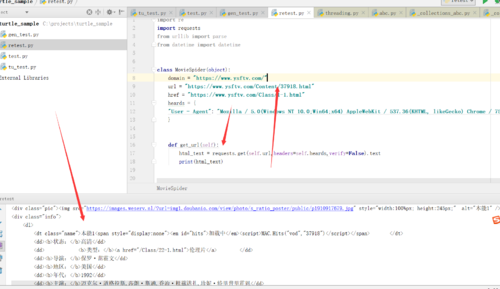

class MovieSpider(object):
    domain = "https://www.ysftv.com/"
    url = "https://www.ysftv.com/Content/37918.html"
    href = "https://www.ysftv.com/Class/1-1.html"
    # Browser-like request headers (attribute name kept as in the original post)
    heards = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/75.0.3770.142 Safari/537.36"
    }

    def parse_tail(self, url, image):
        print("Start parsing detail page: " + url)
        movie = Movie()
        # The last path segment looks like "37918.html"; its leading digits are the ID
        id_text = url.split("/")[-1]
        movie_id = int(re.search(r"(\d+)", id_text).group())

        tail_text = requests.get(url, headers=self.heards).text
        tail_res = Selector(text=tail_text)
        info = tail_res.xpath("//div[@class='content mb clearfix']/div[@class='info']")
        name = info.css("dl > dt.name::text").extract_first('None')
        status = info.xpath("./dl/dd[1]/text()").extract_first('None')
        movie_type = info.xpath("./dl/dd[2]/a/text()").extract_first('None')
        director = info.xpath("./dl/dd[3]/text()").extract_first('None')
        area = info.xpath("./dl/dd[4]/text()").extract_first('None')
        create_year = info.xpath("./dl/dd[5]/text()").extract_first('None')
        actors = info.xpath("./dl/dd[6]/text()").extract_first('None')
        descript = info.css("dl > dt.desd > div.alldes > div.des2::text").extract_first()

        # Collect the first link of each play-source tab (stab_1_71 .. stab_4_71)
        play_channels = []
        for n in range(1, 5):
            play_urls = []
            for div in tail_res.xpath("//div[@id='stab_%d_71']" % n):
                play_url = div.xpath(".//a/@href").extract_first()
                if play_url:
                    play_urls.append(parse.urljoin(self.domain, play_url))
            play_channels.append("&".join(play_urls))

        movie.Movie_Id = movie_id
        movie.Movie_Image = image
        movie.Name = name
        movie.Status = status
        movie.Type = movie_type
        movie.Director = director
        movie.Area = area
        movie.Creat_Year = create_year
        movie.Actors = actors
        movie.Descript = descript
        movie.Paly_Channel1 = play_channels[0]
        movie.Paly_Channel2 = play_channels[1]
        movie.Paly_Channel3 = play_channels[2]
        movie.Paly_Channel4 = play_channels[3]

        # Update the row if this ID is already stored, otherwise insert a new one
        existed_movie = Movie.select().where(Movie.Movie_Id == movie.Movie_Id)
        if existed_movie:
            movie.save()
        else:
            movie.save(force_insert=True)

    def parse_topic(self, href):
        print("Start parsing topic: " + href)
        topic_text = requests.get(href, headers=self.heards, verify=False).text
        topic_res = Selector(text=topic_text)
        topic_lis = topic_res.xpath("//div[@class='index-tj mb clearfix']/ul/li")
        for li in topic_lis:
            movie_image = li.xpath("./a[@class='li-hv']/div[@class='img']/img/@data-original").extract_first("None")
            tail_url = li.xpath("./a[@class='li-hv']/@href").extract_first("None")
            tail_url = parse.urljoin(self.domain, tail_url)
            self.parse_tail(tail_url, movie_image)

        # '下一页' is the "next page" link text on the site. extract_first() returns
        # None on the last page, where extract()[0] would raise IndexError.
        next_page = topic_res.xpath("//div[@class='page mb clearfix']/a[contains(text(),'下一页')]/@href").extract_first()
        if next_page:
            next_page = parse.urljoin(self.domain, next_page)
            self.parse_topic(next_page)

    def get_url(self):
        html_text = requests.get(self.domain, headers=self.heards, verify=False).text
        res = Selector(text=html_text)
        all_lis = res.css(".nav-pc > li")
        # Skip the first and last navigation entries
        for li in all_lis[1:-1]:
            href = li.xpath("./b[@class='navb']/a/@href").extract_first("None")
            href = parse.urljoin(self.domain, href)
            self.parse_topic(href)

if __name__ == "__main__":
    spider = MovieSpider()
    spider.get_url()
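
The two string operations that parse_tail and parse_topic rely on, pulling the numeric ID out of a detail URL and resolving relative links against the site root, can be checked without any network access. The sketch below is a minimal illustration only; the helper names `extract_movie_id` and `absolute_url` and the constant `DOMAIN` are hypothetical and not part of the original spider.

```python
import re
from urllib import parse

DOMAIN = "https://www.ysftv.com/"  # same value as MovieSpider.domain


def extract_movie_id(url):
    """Return the leading digits of the last path segment as an int.

    Hypothetical helper; raises ValueError if the segment has no leading digits.
    """
    last_segment = url.split("/")[-1]  # e.g. "37918.html"
    match = re.match(r"\d+", last_segment)
    if match is None:
        raise ValueError("no numeric id in %r" % url)
    return int(match.group())


def absolute_url(href, base=DOMAIN):
    """Resolve a relative link such as "/Class/1-1.html" against the site root."""
    return parse.urljoin(base, href)


print(extract_movie_id("https://www.ysftv.com/Content/37918.html"))  # 37918
print(absolute_url("/Class/1-1.html"))  # https://www.ysftv.com/Class/1-1.html
```

With a pattern such as `(\d*)`, a segment that does not start with a digit produces an empty match, and `int("")` then raises ValueError with a less helpful message, which is why the sketch checks for a missing match explicitly.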

The models code:

import MySQLdb
from peewee import *

db = MySQLDatabase("movie", host="127.0.0.1", port=3306, user="root", password="")


class BaseModel(Model):
    class Meta:
        database = db


class Movie(BaseModel):
    Movie_Id = IntegerField(primary_key=True)
    Movie_Image = CharField(null=True)
    Name = TextField(null=True)
    Status = CharField(max_length=20, null=True)
    Type = CharField(max_length=30, null=True)
    Director = TextField(null=True)
    Area = CharField(max_length=20, null=True)
    Creat_Year = CharField(max_length=10, null=True)
    Actors = TextField(null=True)
    Descript = TextField(null=True)
    Paly_Channel1 = TextField(null=True)
    Paly_Channel2 = TextField(null=True)
    Paly_Channel3 = TextField(null=True)
    Paly_Channel4 = TextField(null=True)


if __name__ == "__main__":
    db.create_tables([Movie])

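Dear Bobby,

This is a crawler I wrote recently to practice scraping static web pages, but every time I run it I get the following error:

requests.exceptions.SSLError: HTTPSConnectionPool(host='www.ysftv.com', port=443): Max retries exceeded with url: /Content/65596.html (Caused by SSLError(SSLError("bad handshake: SysCallError(10054, 'WSAECONNRESET')")))

I searched Baidu and could not find an explanation. I tried the fix suggested online of setting verify to False in the requests call (tail_text = requests.get(url, headers=self.heards, verify=False).text), but the same error still occurs. Could you please help me with the following:

1. What kind of error is this exactly?

2. What causes it?

3. How can it be fixed?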
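
On question 1, the message is a chain of wrapped exceptions: the SSLError from requests wraps a "Max retries exceeded" error, which in turn wraps `SysCallError(10054, 'WSAECONNRESET')`. Windows socket error 10054 means the remote end reset the connection. The sketch below walks such a chain down to the innermost exception so it can be inspected. The helper `root_cause` and the class `FakeMaxRetryError` are hypothetical names introduced for illustration; the fake exceptions only stand in for the real library types.

```python
def root_cause(exc):
    """Follow wrapped exceptions down to the innermost one.

    Checks a `reason` attribute (as carried by urllib3's MaxRetryError),
    then __cause__ and __context__, then the first positional argument.
    """
    seen = set()
    while id(exc) not in seen:
        seen.add(id(exc))
        candidates = [getattr(exc, "reason", None), exc.__cause__, exc.__context__]
        if exc.args:
            candidates.append(exc.args[0])
        inner = next((c for c in candidates if isinstance(c, BaseException)), None)
        if inner is None:
            break
        exc = inner
    return exc


class FakeMaxRetryError(Exception):
    """Stand-in (assumption) for an error that stores its cause in `reason`."""

    def __init__(self, reason):
        super().__init__("Max retries exceeded")
        self.reason = reason


# Rebuild the nesting seen in the error message with stand-in exceptions
inner = OSError(10054, "WSAECONNRESET")
wrapped = Exception(FakeMaxRetryError(Exception(inner)))
print(root_cause(wrapped).args)
```

In the spider this could be called inside an `except requests.exceptions.SSLError as e:` block around `requests.get`. If the innermost error turns out to be a connection reset during the handshake, `verify=False` would not be expected to change the outcome, since that option only turns off certificate verification.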

1 answer

bobby

2019-08-05

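Judging from your traceback, the error was raised while requesting one particular URL.

I just tried requesting that URL directly and got no error. Could you try requesting it directly as well?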
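
Following the suggestion to request the failing URL on its own, the sketch below wraps a single call in a small retry loop with a growing delay, which may help tell a one-off connection reset from a URL that fails every time. `call_with_retry` and its parameters are hypothetical names introduced here, and the assumption that the resets are transient (for example, caused by requesting many pages in quick succession) is not confirmed anywhere in this thread.

```python
import time


def call_with_retry(func, retry_on, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call func(); on an exception listed in retry_on, wait and try again.

    The delay doubles after each failed attempt. The last failure is re-raised.
    """
    for attempt in range(attempts):
        try:
            return func()
        except retry_on:
            if attempt == attempts - 1:
                raise
            sleep(base_delay * (2 ** attempt))


# Usage with requests (commented out so the sketch runs without network access):
#
# import requests
# url = "https://www.ysftv.com/Content/65596.html"
# resp = call_with_retry(
#     lambda: requests.get(url, headers=MovieSpider.heards, timeout=10),
#     retry_on=(requests.exceptions.SSLError, requests.exceptions.ConnectionError),
# )
# print(resp.status_code)
```

If the single URL succeeds on its own but fails inside the full crawl, that would point toward the request pattern rather than the page itself; adding a short pause between detail-page requests is one thing to try under that assumption.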
