Missing argument grant_type

Source: 9-1 Selenium dynamic page requests and simulated Zhihu login

lemonlxn

2018-08-02

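Hello teacher, I followed the code from your latest video and tried writing it myself. The account and password are entered correctly, but when I click the login button, the page shows the message "Missing argument grant_type". Even when I click the button manually, the same "Missing argument grant_type" message appears.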

10 answers


python_me

2018-08-06

Fully solved on Ubuntu:

1. Downgrade Chrome to version 60. Download: https://www.slimjet.com/chrome/download-chrome.php?file=lnx%2Fchrome64_60.0.3112.90.deb

2. Use chromedriver version 2.33. Download: https://chromedriver.storage.googleapis.com/2.33/chromedriver_linux64.zip
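The fix above comes down to pairing a browser build with a driver release that supports it. A minimal sketch of such a check follows. The `SUPPORTED_CHROME` table and both function names are hypothetical, and the version ranges are recalled from the ChromeDriver release notes, so they should be verified before being relied on.

```python
# Hypothetical table: chromedriver release -> (min, max) supported Chrome major version.
# Assumption: ranges recalled from the ChromeDriver release notes; verify before use.
SUPPORTED_CHROME = {
    "2.33": (60, 62),
    "2.41": (67, 69),
}


def major_version(version):
    """Return the major component of a dotted version string."""
    return int(version.split(".")[0])


def is_compatible(chrome_version, driver_version):
    """Check whether a Chrome build falls inside the driver's supported range."""
    if driver_version not in SUPPORTED_CHROME:
        raise KeyError("unknown chromedriver release: " + driver_version)
    low, high = SUPPORTED_CHROME[driver_version]
    return low <= major_version(chrome_version) <= high


if __name__ == "__main__":
    # The Chrome build linked in the answer, paired with chromedriver 2.33
    print(is_compatible("60.0.3112.90", "2.33"))
```

A check like this only confirms that the pair is supported; it does not by itself explain or prevent the Missing argument grant_type message.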


python_me

2018-08-06

Same problem here. Requesting help with the code.


w84422

2018-08-04

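I found out why visiting the home page returned 400: the line return [scrapy.Request(url=self.start_urls[0], dont_filter=True, cookies=cookie_dict)] has no headers = XXX argument. Once it is added, the page can be accessed normally.

In summary, the Missing argument grant_type error is caused by the browser automatically upgrading itself to the latest version. Downgrading Firefox to version 57.0 and using geckodriver-v0.21.0-win64 as the driver solved the problem.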
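The headers fix described in this answer can be sketched as follows. The header values are taken from the spider posted in this thread; `build_request_kwargs` and the cookie name `example_cookie` are hypothetical, introduced only for illustration. The helper just assembles keyword arguments, so it runs without Scrapy installed.

```python
# Header values as used by the spider posted in this thread.
HEADERS = {
    "HOST": "www.zhihu.com",
    "Referer": "https://www.zhihu.com",
    "User-Agent": "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:51.0) Gecko/20100101 Firefox/51.0",
}


def build_request_kwargs(url, cookie_dict, headers=HEADERS):
    """Collect keyword arguments for scrapy.Request, this time including headers."""
    return {
        "url": url,
        "dont_filter": True,
        "cookies": dict(cookie_dict),
        "headers": dict(headers),
    }


# "example_cookie" is a placeholder, not a real Zhihu cookie name.
kwargs = build_request_kwargs("https://www.zhihu.com/", {"example_cookie": "xxx"})

# Inside start_requests one would then write:
#     return [scrapy.Request(**kwargs)]
```

The only difference from the original line is the extra `headers` argument, so the first request carries the same User-Agent and Referer as a browser visit would.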


w84422

2018-08-04

I downgraded Firefox to version 57.0 and no longer get Missing argument grant_type. However, after return [scrapy.Request(url=self.start_urls[0], dont_filter=True, cookies=cookie_dict)], opening 'https://www.zhihu.com/' returns a 400 error, and execution never reaches the def parse(self, response): method.


heipepper

2018-08-04

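Here is the situation: two days ago, logging in with selenium and Chrome driver still worked. By the time I reached the step of writing to the database, the simulated login started failing with the Missing argument grant_type error. I then downloaded the Firefox browser driver, but that did not work either; it gives the same error.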


heipepper

2018-08-04

Same problem here.


qq_马与草原_04174498

2018-08-04

Did you get it working? I have the same problem.


bobby

2018-08-03

    # -*- coding: utf-8 -*-
    import re
    import json
    import datetime
    import pickle
    import time

    try:
        import urlparse as parse
    except ImportError:
        from urllib import parse

    import scrapy
    from scrapy.loader import ItemLoader
    from items import ZhihuQuestionItem, ZhihuAnswerItem


    class ZhihuSpider(scrapy.Spider):
        name = "zhihu_sel"
        allowed_domains = ["www.zhihu.com"]
        start_urls = ['https://www.zhihu.com/']

        # Request URL for the first page of answers to a question
        start_answer_url = "https://www.zhihu.com/api/v4/questions/{0}/answers?sort_by=default&include=data%5B%2A%5D.is_normal%2Cis_sticky%2Ccollapsed_by%2Csuggest_edit%2Ccomment_count%2Ccollapsed_counts%2Creviewing_comments_count%2Ccan_comment%2Ccontent%2Ceditable_content%2Cvoteup_count%2Creshipment_settings%2Ccomment_permission%2Cmark_infos%2Ccreated_time%2Cupdated_time%2Crelationship.is_author%2Cvoting%2Cis_thanked%2Cis_nothelp%2Cupvoted_followees%3Bdata%5B%2A%5D.author.is_blocking%2Cis_blocked%2Cis_followed%2Cvoteup_count%2Cmessage_thread_token%2Cbadge%5B%3F%28type%3Dbest_answerer%29%5D.topics&limit={1}&offset={2}"

        headers = {
            "HOST": "www.zhihu.com",
            "Referer": "https://www.zhihu.com",
            'User-Agent': "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:51.0) Gecko/20100101 Firefox/51.0"
        }

        custom_settings = {
            "COOKIES_ENABLED": True
        }

        def parse(self, response):
            """
            Extract all URLs from the HTML page and follow them for further crawling.
            If an extracted URL has the form /question/xxx, download it and
            pass it straight to the parsing function.
            """
            pass

        def parse_question(self, response):
            # Handle the question page and extract the question item from it
            pass

        def parse_answer(self, response):
            pass

        def start_requests(self):
            from selenium import webdriver

            # Log in through a real browser so the site sets its session cookies
            browser = webdriver.Chrome(executable_path="E:/tmp/chromedriver.exe")
            browser.get("https://www.zhihu.com/signin")
            browser.find_element_by_css_selector(".SignFlow-accountInput.Input-wrapper input").send_keys("xxx")
            browser.find_element_by_css_selector(".SignFlow-password input").send_keys("xxx")
            browser.find_element_by_css_selector(".Button.SignFlow-submitButton").click()

            # Wait for the login to complete before reading the cookies
            time.sleep(10)
            Cookies = browser.get_cookies()
            print(Cookies)

            cookie_dict = {}
            for cookie in Cookies:
                # Write each cookie to a file
                with open('H:/scrapy/ArticleSpider/cookies/zhihu/' + cookie['name'] + '.zhihu', 'wb') as f:
                    pickle.dump(cookie, f)
                cookie_dict[cookie['name']] = cookie['value']
            browser.close()

            return [scrapy.Request(url=self.start_urls[0], dont_filter=True, cookies=cookie_dict)]

Copy this code over and try running it. I just ran it here and it worked without any problems.
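The cookie handling at the end of start_requests can be tried in isolation, without a browser. The sketch below assumes the list-of-dicts shape that Selenium's `get_cookies()` returns; the helper names, the sample cookies, and the single-file layout are hypothetical and differ from the one-file-per-cookie approach in the spider.

```python
import os
import pickle
import tempfile


def cookies_to_dict(cookies):
    """Flatten a list of cookie dicts into the {name: value} form scrapy.Request accepts."""
    return {cookie["name"]: cookie["value"] for cookie in cookies}


def save_cookies(cookies, path):
    """Pickle the full cookie list into one file."""
    with open(path, "wb") as f:
        pickle.dump(cookies, f)


def load_cookies(path):
    """Read back a cookie list written by save_cookies."""
    with open(path, "rb") as f:
        return pickle.load(f)


# Stand-in data shaped like the output of browser.get_cookies()
sample = [
    {"name": "a", "value": "1", "domain": ".example.com"},
    {"name": "b", "value": "2", "domain": ".example.com"},
]

path = os.path.join(tempfile.mkdtemp(), "cookies.pkl")
save_cookies(sample, path)
restored = cookies_to_dict(load_cookies(path))
print(restored)
```

Saving the whole list once makes it possible to reload the cookies on a later run and skip the browser login while the session is still valid.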


lemonlxn

Asker

2018-08-03

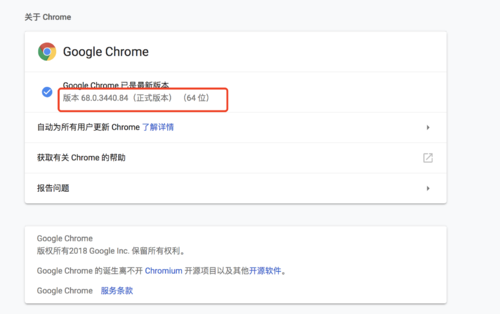

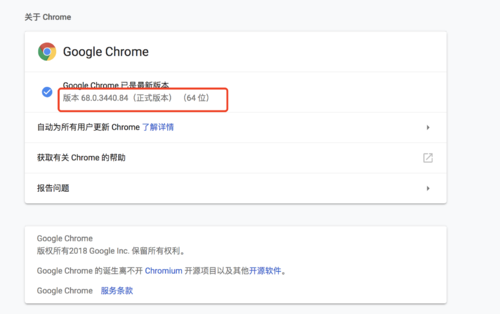

Hello teacher, my Chrome version is the latest.

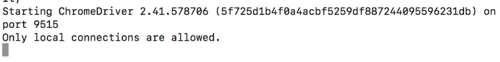

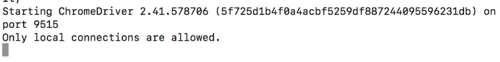

My chromedriver is also the latest July release, version 2.41, and the Missing argument grant_type problem still occurs.


bobby

2018-08-03

Do you get the problem when you visit the site in your own browser? Or could you try the latest chromedriver?

