scrapy-redis raises an error when a loader is passed through meta

Source: 10-4 Writing distributed crawler code with scrapy-redis

ErogenousMonstar

2019-12-24

Error message:

    2019-12-24 19:17:20 [twisted] CRITICAL:
    Traceback (most recent call last):
      File "C:\Users\Tommy\Envs\py36x64\lib\site-packages\twisted\internet\task.py", line 517, in _oneWorkUnit
        result = next(self._iterator)
      File "C:\Users\Tommy\Envs\py36x64\lib\site-packages\scrapy\utils\defer.py", line 63, in <genexpr>
        work = (callable(elem, *args, **named) for elem in iterable)
      File "C:\Users\Tommy\Envs\py36x64\lib\site-packages\scrapy\core\scraper.py", line 184, in _process_spidermw_output
        self.crawler.engine.crawl(request=output, spider=spider)
      File "C:\Users\Tommy\Envs\py36x64\lib\site-packages\scrapy\core\engine.py", line 210, in crawl
        self.schedule(request, spider)
      File "C:\Users\Tommy\Envs\py36x64\lib\site-packages\scrapy\core\engine.py", line 216, in schedule
        if not self.slot.scheduler.enqueue_request(request):
      File "C:\Users\Tommy\Envs\py36x64\lib\site-packages\scrapy_redis\scheduler.py", line 167, in enqueue_request
        self.queue.push(request)
      File "C:\Users\Tommy\Envs\py36x64\lib\site-packages\scrapy_redis\queue.py", line 99, in push
        data = self._encode_request(request)
      File "C:\Users\Tommy\Envs\py36x64\lib\site-packages\scrapy_redis\queue.py", line 43, in _encode_request
        return self.serializer.dumps(obj)
      File "C:\Users\Tommy\Envs\py36x64\lib\site-packages\scrapy_redis\picklecompat.py", line 14, in dumps
        return pickle.dumps(obj, protocol=-1)
      File "C:\Users\Tommy\Envs\py36x64\lib\site-packages\parsel\selector.py", line 204, in __getstate__
        raise TypeError("can't pickle Selector objects")
    TypeError: can't pickle Selector objects

Does the instructor know how to solve this? I searched for a long time and could not find anything. Thank you.


3 answers


bobby

2019-12-25

Are you yielding a Selector object instead of a Request object?


bobby

2019-12-29

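The error here seems to say that putting a Selector into the request fails. You could try putting the HTML into the request instead, and then, in the other callback, instantiate a new Selector from that HTML.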
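As a rough illustration of the suggestion above: the traceback shows scrapy-redis calling `pickle.dumps(obj, protocol=-1)` on the request, so everything stored in `meta` has to be picklable. The sketch below uses only the standard library. `StandInSelector` and `can_pickle` are hypothetical names, and the stand-in class only imitates the `TypeError` that `parsel.Selector` raises; it is not the real class. In an actual spider, the second callback would presumably rebuild the selector with something like `Selector(text=response.meta["html"])`.

```python
import pickle


class StandInSelector:
    """Hypothetical stand-in for parsel.Selector (assumption, not the real class).

    It imitates the behaviour seen in the traceback: pickling raises TypeError.
    """

    def __init__(self, text):
        self.text = text

    def __getstate__(self):
        raise TypeError("can't pickle Selector objects")


def can_pickle(meta):
    """Return True if meta survives the same pickle call scrapy-redis makes."""
    try:
        pickle.dumps(meta, protocol=-1)
    except TypeError:
        return False
    return True


html = "<html><body><a class='author'>Tommy</a></body></html>"

bad_meta = {"selector": StandInSelector(html)}  # selector object in meta
good_meta = {"html": html}                      # plain string in meta

print(can_pickle(bad_meta))
print(can_pickle(good_meta))

# Simulate the round trip through Redis, then rebuild the selector
# from the HTML string in the "next callback".
restored = pickle.loads(pickle.dumps(good_meta, protocol=-1))
rebuilt = StandInSelector(restored["html"])
print(rebuilt.text == html)
```

The point of the design is that only plain data (strings, numbers, lists, dicts) travels with the request, and any selector is created again on the receiving side.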


ErogenousMonstar

Asker

2019-12-27

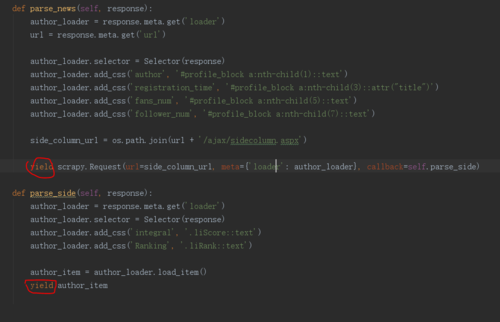

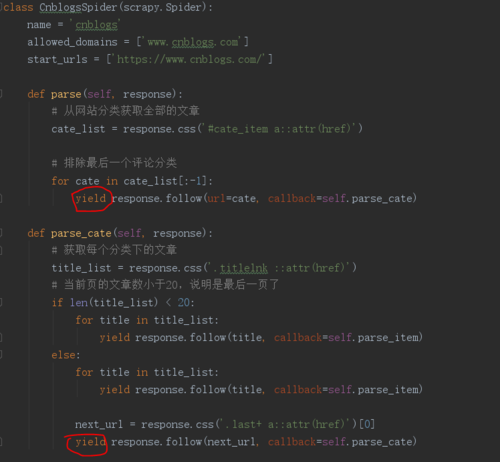

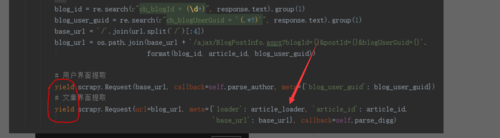

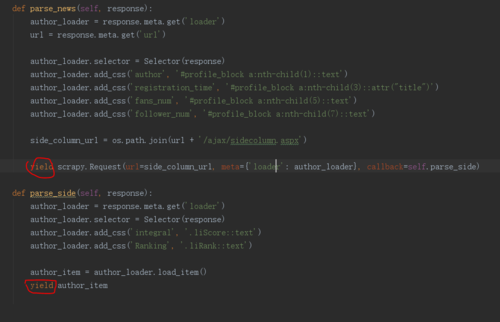

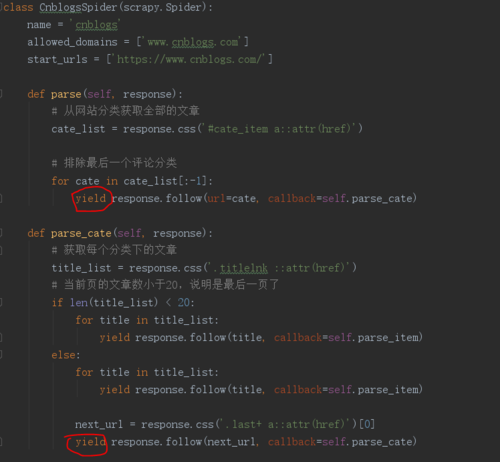

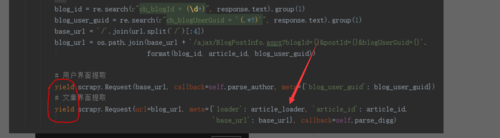

Here are screenshots of my entire spider.py. You can also look at it directly on my GitHub: https://github.com/ErogenousMonster/spider_items/blob/master/S01_Cnblogs/S01_Cnblogs/spiders/cnblogs.py

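My first suspicion was that the error came from assigning a new selector to the loader while passing it along: `author_loader.selector = Selector(response)`. I then commented out that kind of code and passed only the loader, but it still reports the same error.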
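One possible explanation for this reply, offered as an assumption since the spider code is only available as screenshots: if the loader was created with a `response` or `selector`, it keeps a reference to a Selector internally, so pickling the loader still reaches the selector even without the explicit assignment. The sketch below imitates that with standard-library stand-ins. `FakeSelector`, `FakeLoader`, and `unpicklable_keys` are hypothetical names, not Scrapy APIs.

```python
import pickle


class FakeSelector:
    """Hypothetical stand-in for parsel.Selector: pickling raises TypeError."""

    def __getstate__(self):
        raise TypeError("can't pickle Selector objects")


class FakeLoader:
    """Hypothetical stand-in for an item loader that holds a selector."""

    def __init__(self):
        self.selector = FakeSelector()  # kept even if never reassigned
        self._values = {}

    def add_value(self, field, value):
        self._values.setdefault(field, []).append(value)

    def load_item(self):
        # Return plain data only: the first collected value per field.
        return {field: values[0] for field, values in self._values.items()}


def unpicklable_keys(meta):
    """Return the meta keys whose values pickle.dumps rejects."""
    bad = []
    for key, value in meta.items():
        try:
            pickle.dumps(value, protocol=-1)
        except Exception:
            bad.append(key)
    return bad


loader = FakeLoader()
loader.add_value("author", "Tommy")

# Passing the loader itself drags the selector along with it.
print(unpicklable_keys({"loader": loader}))

# Passing only the values collected so far keeps meta picklable.
print(unpicklable_keys({"partial_item": loader.load_item()}))
```

Under this assumption, passing the values collected so far and creating a fresh loader in the next callback would avoid the error, and a helper like `unpicklable_keys` can point to the offending `meta` entry before the request is yielded.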
